AMD EPYC vs Intel Xeon: Best Server CPU Comparison

Server processors are the foundation of today's data centers, and AMD EPYC and Intel Xeon are the two leading x86 families in this market. The global server CPU market hit $74.5 billion in 2023 and is expected to grow to $114.6 billion by 2032.

Processor choice affects application performance, power consumption, and total cost. Each architecture has advantages for specific workloads, so you should weigh core counts, memory bandwidth, I/O capabilities, and software compatibility.

This guide examines the technical differences between AMD EPYC and Intel Xeon processors. We cover performance benchmarks, features, and costs.

#What is AMD EPYC?

AMD EPYC is AMD's server processor line based on the Zen microarchitecture. These processors use a chiplet design, which combines several CPU dies with a separate I/O die. This design helps AMD scale core counts efficiently and maintain high yields.

EPYC 9004 processors have 12 DDR5 memory channels. They offer up to 128 PCIe 5.0 lanes in single-socket systems and up to 160 in dual-socket configurations. The Infinity Fabric interconnect links the chiplets at transfer rates of up to 32 GT/s.

Security features include:

- Secure Encrypted Virtualization (SEV) for VM memory encryption
- SEV-SNP (Secure Nested Paging) for memory integrity protection and guest attestation

These processors target cloud providers, enterprise data centers, and HPC installations.

#What is Intel Xeon?

Intel Xeon processors power enterprise servers with a focus on reliability and broad ecosystem support. The latest 4th and 5th Generation Xeon Scalable processors use Intel 7 process technology with enhanced microarchitecture.

These processors employ a tile-based design in higher core count models. Intel combines compute tiles with I/O tiles through EMIB (Embedded Multi-die Interconnect Bridge) technology. Core counts range from 8 to 60 cores per socket.

Xeon processors include built-in accelerators:

- Intel AMX: Matrix operations for AI workloads
- QAT: Cryptography and compression acceleration
- DLB: Dynamic load balancing
- DSA: Data streaming acceleration

The platform supports 8 DDR5 channels and up to 112 PCIe 5.0 lanes. Intel positions these processors for diverse workloads, including databases, virtualization, AI inference, and enterprise applications.


#AMD EPYC vs Intel Xeon

AMD and Intel server processors take fundamentally different approaches to achieving performance leadership. This section examines their technical differences and performance, compares features and costs, and identifies optimal use cases for each platform.

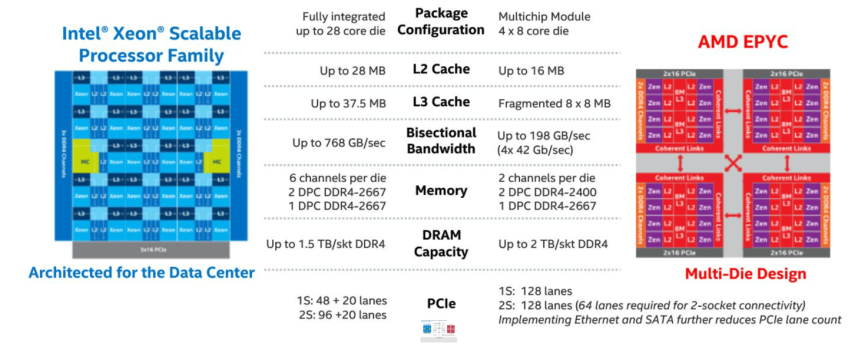

#Key technical differences

AMD EPYC and Intel Xeon use different architectures, each with benefits that depend on the workload. AMD builds every EPYC processor from a modular set of chiplets. Intel keeps a monolithic die on lower core count parts and moves to multi-tile packaging on higher core count parts.

AMD's chiplet architecture separates the processor into multiple components. The EPYC 9004 series features up to 12 Core Complex Dies (CCDs) made with TSMC's 5nm process. It also has a centralized I/O Die (IOD) built on 6nm. The EPYC approach offers several advantages:

- Higher manufacturing yields on smaller dies
- Independent optimization of compute and I/O components
- Cost-effective scaling to high core counts
- Flexibility to mix different CCD types (standard vs. dense cores)

Each CCD contains 8 Zen 4 cores and 32MB of shared L3 cache. The IOD houses memory controllers, PCIe controllers, and the Infinity Fabric interconnect that links all components at speeds up to 32 GT/s.

Intel's 4th and 5th-generation Xeon processors use different approaches based on core count. Lower core count stock keeping units (SKUs) employ monolithic dies manufactured using Intel 7 process technology. Higher core count models utilize Intel's advanced packaging with key characteristics:

- Multiple tiles connected through EMIB (Embedded Multi-die Interconnect Bridge)
- Compute tiles contain cores and cache
- Separate I/O tiles handle memory and PCIe connectivity
- Tighter integration between components than AMD's approach

| Technical Specification | AMD EPYC 9004 Series | Intel Xeon 4th/5th Gen |
|---|---|---|
| Manufacturing Node | TSMC 5 nm (CCD) / 6 nm (IOD) | Intel 7 (10 nm Enhanced) |
| Architecture | Zen 4 | Golden Cove / Raptor Cove |
| Maximum Cores | 128 (Bergamo) / 96 (Genoa) | 60 (Platinum 8490H) |
| Maximum Threads | 256 / 192 | 120 |
| Memory Channels | 12 × DDR5 | 8 × DDR5 |
| Maximum Memory Speed | DDR5-4800 | DDR5-4800 / DDR5-5600 |
| Memory Capacity | 6 TB (12 × 512 GB) | 4 TB (8 × 512 GB) |
| PCIe Generation | PCIe 5.0 | PCIe 5.0 |
| PCIe Lanes | 128 (1S) / 160 (2S) | 80 (1S) / 112 (2S) |
| L3 Cache | Up to 384 MB / 1152 MB (X3D) | Up to 112.5 MB |
| Base TDP | 200 W – 400 W | 150 W – 350 W |

The memory subsystem is a critical point of differentiation. EPYC's 12 memory channels provide 460.8 GB/s of theoretical bandwidth with DDR5-4800, which is 50% more than an 8-channel Xeon design running at the same DDR5-4800 speed. The gap narrows on Xeon parts that support DDR5-5600.
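
The bandwidth figure can be reproduced with a few lines of arithmetic. The sketch below assumes a 64-bit (8-byte) data path per DDR5 channel and decimal gigabytes; the function name `peak_bandwidth_gbs` is illustrative, and the result is a theoretical peak rather than a measured value.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal units)."""
    # MT/s times bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return channels * mega_transfers_per_s * bus_bytes / 1000


epyc = peak_bandwidth_gbs(channels=12, mega_transfers_per_s=4800)
xeon = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=4800)
xeon_5600 = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=5600)

print(f"EPYC, 12 x DDR5-4800: {epyc:.1f} GB/s")
print(f"Xeon,  8 x DDR5-4800: {xeon:.1f} GB/s")
print(f"Xeon,  8 x DDR5-5600: {xeon_5600:.1f} GB/s")
print(f"EPYC advantage at equal speed: {(epyc / xeon - 1) * 100:.0f}%")
```

Sustained bandwidth in real workloads is lower than these peaks on both platforms, so the ratio is more useful than the absolute numbers.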

Key memory advantages include:

- Superior bandwidth for memory-intensive applications
- Better memory capacity scaling with more DIMM slots
- Consistent latency across all cores to memory controllers
- Support for memory interleaving across all channels

Cache hierarchy differs substantially between platforms. EPYC processors feature:

- 1MB L2 cache per core (private)
- 32MB L3 cache per CCD (shared among 8 cores)
- Optional 3D V-Cache adding 64MB per CCD
- Total L3 up to 1152MB in X3D variants
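
The cache totals follow directly from the chiplet layout. This short sketch uses only the per-CCD figures quoted in this article (12 CCDs, 8 cores and 32MB of L3 per CCD, 64MB of stacked cache per CCD); the variable names are illustrative.

```python
CCDS = 12                # maximum CCDs on EPYC 9004
CORES_PER_CCD = 8        # Zen 4 cores per standard CCD
L2_PER_CORE_MB = 1       # private L2 per core
L3_PER_CCD_MB = 32       # shared L3 per CCD
VCACHE_PER_CCD_MB = 64   # extra stacked L3 per CCD on 3D V-Cache parts

cores = CCDS * CORES_PER_CCD
l2_total_mb = cores * L2_PER_CORE_MB
l3_standard_mb = CCDS * L3_PER_CCD_MB
l3_vcache_mb = CCDS * (L3_PER_CCD_MB + VCACHE_PER_CCD_MB)

print(f"Cores:            {cores}")
print(f"Total L2:         {l2_total_mb} MB")
print(f"L3 (standard):    {l3_standard_mb} MB")
print(f"L3 (3D V-Cache):  {l3_vcache_mb} MB")
```

Note that the L3 is shared only within a CCD, so a single core sees 32MB (or 96MB with 3D V-Cache) at local latency, not the full total.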

Intel Xeon processors provide:

- Large shared L3 cache across all cores
- Lower latency for cache hits
- Up to 112.5MB total L3 cache

#Performance comparison

Performance varies considerably with the workload, and each platform leads in different areas. The figures below come from industry-standard benchmarks.

Integer performance, measured by SPECrate2017_int_base, demonstrates EPYC's advantage in highly parallel workloads:

- EPYC 9754 (128-core): 2,050 points
- EPYC 9654 (96-core): 1,790 points
- Xeon 8490H (60-core): 1,020 points
- Xeon 8480+ (56-core): 952 points

These results apply to real-world uses such as web serving, compilation, and general computing. Per-core performance is competitive across platforms. However, EPYC’s higher core counts provide better overall throughput.
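
To see why per-core performance is described as competitive, the scores can be divided by core count. The sketch below uses the SPECrate2017_int_base figures exactly as quoted in this article; they have not been independently verified here, and score per core is only a rough normalization, not an official SPEC metric.

```python
# (score, cores) pairs as quoted in the list above
results = {
    "EPYC 9754": (2050, 128),
    "EPYC 9654": (1790, 96),
    "Xeon 8490H": (1020, 60),
    "Xeon 8480+": (952, 56),
}

per_core = {cpu: score / cores for cpu, (score, cores) in results.items()}

for cpu, value in per_core.items():
    score, cores = results[cpu]
    print(f"{cpu:<11} {score:>5} total  {value:5.1f} per core")
```

On these numbers the per-core values sit within a few points of each other, so the gap in total throughput comes mainly from core count.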

Database performance tells a more complex story. In TPC-C online transaction processing benchmarks, performance leaders include:

- Dual EPYC 9654: 30.5 million tpmC
- Dual Xeon 8480+: 28.1 million tpmC
- Single EPYC 9654: 17.2 million tpmC
- Single Xeon 8490H: 15.8 million tpmC

EPYC's lead of roughly 8.5% in the dual-socket result (30.5 vs. 28.1 million tpmC) stems from superior memory bandwidth feeding data-hungry database operations. However, Intel's architectural advantages benefit specific database operations:

- Lower memory latency aids small random transactions
- AVX-512 accelerates analytical queries
- Better branch prediction for complex stored procedures
- Optimized code paths in commercial databases

High-Performance Computing workloads showcase EPYC's memory bandwidth advantage. Scientific applications see substantial improvements:

- Weather modeling (WRF): 35% faster on EPYC
- Computational fluid dynamics: 25-40% improvement
- Molecular dynamics: 30% reduction in time-to-solution
- Finite element analysis: 20-30% performance gain

Artificial Intelligence and Machine Learning present interesting trade-offs. For training large models, EPYC advantages include:

- 50% larger batch sizes due to memory capacity
- Faster data preprocessing with more cores
- Superior gradient computation throughput
- Better multi-GPU scaling with more PCIe lanes

Intel's Advanced Matrix Extensions (AMX) provide significant inference acceleration:

- Up to 8x speedup for INT8 operations
- 3-4x improvement for bfloat16 inference
- Native support in major frameworks
- Lower latency for real-time inference
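
Before relying on AMX, it helps to confirm that the host actually exposes it. The following is a minimal sketch that assumes a Linux host, where the kernel lists CPU features on the `flags` line of `/proc/cpuinfo`; the helper names `cpu_flags` and `amx_report` are hypothetical, and the flag names (`amx_tile`, `amx_int8`, `amx_bf16`, `avx512f`) are the ones the Linux kernel uses.

```python
def cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags of the first CPU found in /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()  # no flags line (for example, not an x86 Linux host)


def amx_report(flags: set) -> dict:
    """Map each feature of interest to whether the CPU advertises it."""
    wanted = ("amx_tile", "amx_int8", "amx_bf16", "avx512f")
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            text = handle.read()
    except OSError:
        text = ""  # /proc is unavailable on this system
    for name, present in amx_report(cpu_flags(text)).items():
        print(f"{name:<9} {'yes' if present else 'no'}")
```

A flag being present only shows that the hardware and kernel expose the feature; whether a given framework build uses it is a separate question.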

Virtualization density benchmarks from VMware VMmark reveal:

- EPYC 9754: Supports 2,048 lightweight VMs
- Xeon 8490H: Supports 1,280 lightweight VMs
- EPYC 9654: 1,536 VMs with 35% better consolidation
- Xeon 8480+: 1,024 VMs with consistent performance

Power efficiency varies by workload intensity. The SPECpower_ssj2008 benchmark shows:

- EPYC 9654: 15,234 operations per watt
- Xeon 8480+: 13,876 operations per watt
- EPYC advantage: 10-15% at full load
- Intel advantage: 8-12% at idle

#Feature comparison

Enterprise features extend beyond raw performance, encompassing security, reliability, and manageability capabilities that ensure stable operation in production environments.

Security implementations reflect different approaches. AMD's Infinity Guard security suite provides comprehensive protection:

- SEV (Secure Encrypted Virtualization): Encrypts VM memory with unique keys
- SEV-ES: Extends encryption to CPU register state
- SEV-SNP: Adds remote attestation for verification
- Memory Guard: Protects against cold boot attacks
- Secure Boot: Validates firmware integrity

Intel's security portfolio covers similar ground with a different set of technologies:

- SGX (Software Guard Extensions): Creates encrypted application enclaves
- TDX (Trust Domain Extensions): Confidential VM technology
- Total Memory Encryption: Full physical memory encryption
- Boot Guard: Hardware-verified boot process
- Control-flow Enforcement Technology: Prevents code-reuse attacks

| Security Feature | AMD EPYC | Intel Xeon | Use Case |
|---|---|---|---|
| VM Memory Encryption | SEV / SEV-ES / SEV-SNP | TDX | Multi-tenant clouds |
| Full Memory Encryption | Memory Guard | Total Memory Encryption | Cold boot attack protection |
| Application Enclaves | No direct equivalent | SGX | Sensitive data processing |
| Boot Security | Secure Boot | Boot Guard | Firmware protection |
| Side-channel Mitigation | Hardware fixes | Hardware + microcode | Spectre / Meltdown protection |

Reliability, Availability, and Serviceability (RAS) features ensure continuous operation. Both platforms support essential capabilities:

- ECC memory with single-bit correction, double-bit detection
- Memory patrol scrubbing to prevent error accumulation
- PCIe Advanced Error Reporting (AER)
- Machine Check Architecture (MCA) for error logging
- Predictive failure analysis for proactive maintenance

Platform-specific RAS advantages include:

EPYC RAS Features:

- Memory rank sparing for failed DIMM isolation
- Address range partial mirroring
- DRAM row hammer mitigation
- Infinity Fabric error detection and correction

Intel Xeon RAS Features:

- Full memory mirroring capability
- ADDDC (Adaptive Double Device Data Correction)
- Intel Memory Failure Recovery
- Platform Level Data Model for telemetry

Management capabilities reflect differences in ecosystem maturity:

- Intel platforms: Comprehensive BMC integrations, Intel Node Manager, extensive third-party tool support
- AMD platforms: Industry-standard Redfish API, IPMI 2.0, growing ecosystem support
- Both support: Remote KVM, virtual media, power capping, thermal monitoring
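
As an illustration of what Redfish-based power monitoring looks like, the sketch below parses a `Power` resource of the kind a BMC returns from a chassis endpoint. The sample payload, its values, and the chassis ID `1` are hypothetical; the `PowerControl`, `PowerConsumedWatts`, and `PowerLimit.LimitInWatts` property names come from the DMTF Redfish `Power` schema. Fetching the payload from a real BMC (an authenticated HTTPS GET) is left out, and newer firmware may expose this data through other resources instead.

```python
import json

# Hypothetical response body, shaped like a Redfish Power resource.
SAMPLE_POWER = json.loads("""
{
  "@odata.id": "/redfish/v1/Chassis/1/Power",
  "PowerControl": [
    {
      "Name": "System Power Control",
      "PowerConsumedWatts": 412,
      "PowerLimit": {"LimitInWatts": 600}
    }
  ]
}
""")


def summarize_power(power_resource: dict) -> list:
    """Extract current draw and configured power cap from a Power resource."""
    summary = []
    for control in power_resource.get("PowerControl", []):
        limit = (control.get("PowerLimit") or {}).get("LimitInWatts")
        summary.append({
            "name": control.get("Name"),
            "consumed_watts": control.get("PowerConsumedWatts"),
            "limit_watts": limit,  # None when no cap is configured
        })
    return summary


for entry in summarize_power(SAMPLE_POWER):
    print(entry)
```

Because the schema is vendor-neutral, the same parsing logic should apply to EPYC and Xeon servers alike, although individual BMCs differ in which optional properties they populate.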

#Cost analysis

Total Cost of Ownership (TCO) calculations must consider multiple factors beyond processor pricing. A comprehensive analysis reveals the true infrastructure investment required.

| Configuration | AMD EPYC Option | Street Price | Intel Xeon Option | Street Price | Price Delta |
|---|---|---|---|---|---|
| 32-core | EPYC 9354 | $2,900 | Xeon Platinum 8462Y+ | $3,400 | -15% |
| 48-core | EPYC 9454 | $4,600 | Xeon Platinum 8468 | $5,200 | -12% |
| 64-core | EPYC 9554 | $7,100 | Xeon Platinum 8490H (60c) | $8,900 | -20% |
| 96-core | EPYC 9654 | $11,800 | 2 × Xeon 8468 (96c total) | $10,400 | +13% |
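
The Price Delta column expresses the AMD price relative to the Intel price. A minimal sketch of that calculation, using the street prices from the table (the function name `price_delta_pct` is illustrative):

```python
# (configuration, AMD street price, Intel street price) in USD, from the table
pairs = [
    ("32-core", 2900, 3400),
    ("48-core", 4600, 5200),
    ("64-core", 7100, 8900),
    ("96-core", 11800, 10400),
]


def price_delta_pct(amd_usd: float, intel_usd: float) -> float:
    """AMD price difference as a percentage of the Intel price."""
    return (amd_usd - intel_usd) / intel_usd * 100


for label, amd_usd, intel_usd in pairs:
    print(f"{label}: {price_delta_pct(amd_usd, intel_usd):+.0f}%")
```

A negative value means the EPYC option is cheaper. The 96-core row compares one EPYC socket against two Xeon sockets, so its delta excludes the cost of the second socket's motherboard support and memory.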

Platform costs add complexity to TCO calculations:

- EPYC motherboards: $1,200-$2,000 (12-channel support)
- Xeon motherboards: $800-$1,500 (8-channel support)
- Memory costs: Similar per GB, but EPYC needs 50% more DIMMs to populate every channel
- Cooling solutions: Higher TDP EPYC may require enhanced cooling

Operational expenses significantly impact three-year TCO:

Power Consumption Analysis (70% average load):

- Dual EPYC 9654: 650W average, $1,707 over 3 years
- Dual Xeon 8480+: 580W average, $1,524 over 3 years
- Power Usage Effectiveness (PUE) multiplies these costs by 1.5-2.0
- Cooling infrastructure adds $0.50-$1.00 per power dollar
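
The three-year power figures can be approximated from the average draw. The article does not state its electricity rate; the sketch below assumes $0.10 per kWh and 24 × 365 × 3 hours, which reproduces the quoted totals to within about a dollar. Substitute your own rate and PUE.

```python
HOURS_3_YEARS = 24 * 365 * 3   # 26,280 hours, leap days ignored
RATE_USD_PER_KWH = 0.10        # assumption: not stated in the article


def energy_cost_usd(avg_watts: float, pue: float = 1.0,
                    rate: float = RATE_USD_PER_KWH,
                    hours: int = HOURS_3_YEARS) -> float:
    """Electricity cost in USD for a constant average power draw."""
    kwh = avg_watts / 1000 * hours
    return kwh * rate * pue


for name, watts in (("Dual EPYC 9654", 650), ("Dual Xeon 8480+", 580)):
    base = energy_cost_usd(watts)
    low = energy_cost_usd(watts, pue=1.5)
    high = energy_cost_usd(watts, pue=2.0)
    print(f"{name}: ${base:,.0f} at the server, "
          f"${low:,.0f} to ${high:,.0f} with PUE 1.5 to 2.0")
```

At this rate the difference between the two platforms is under $200 over three years before PUE, which is small next to the hardware and licensing costs discussed in this section.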

Software licensing creates the largest TCO variables:

- VMware vSphere 8: $225 per core
- Oracle Database Enterprise: $47,500 per core
- Microsoft SQL Server Enterprise: $7,128 per core
- Red Hat Enterprise Linux: $349-$1,299 per socket (platform neutral)
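
Per-core licensing is where core count cuts both ways. The sketch below multiplies the per-core prices listed above by the core counts of a 96-core EPYC 9654 and a 60-core Xeon 8490H. It is a deliberate simplification: it treats every price as a flat per-core fee and ignores vendor-specific rules such as minimum core counts, core factors, and negotiated discounts.

```python
# Per-core list prices in USD, as quoted in this article
PER_CORE_USD = {
    "VMware vSphere 8": 225,
    "Oracle Database Enterprise": 47_500,
    "Microsoft SQL Server Enterprise": 7_128,
}

# Cores per socket for the two flagship parts compared in this article
CORES = {"EPYC 9654": 96, "Xeon 8490H": 60}


def license_cost_usd(per_core_usd: int, cores_per_socket: int,
                     sockets: int = 1) -> int:
    """Flat per-core licensing cost for a fully licensed server."""
    return per_core_usd * cores_per_socket * sockets


for product, price in PER_CORE_USD.items():
    for cpu, cores in CORES.items():
        cost = license_cost_usd(price, cores)
        print(f"{product:<32} {cpu:<11} ${cost:>10,}")
```

For commercially licensed software the license bill can exceed the hardware price many times over, so the useful comparison is performance per licensed core rather than performance per socket.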

Open-source software stacks benefit most from EPYC's higher core counts:

- 30-40% lower TCO for Kubernetes deployments
- Superior price/performance for PostgreSQL
- Cost-effective scaling for Apache Spark clusters
- Reduced infrastructure footprint for microservices

#Use case scenarios

Workload characteristics determine optimal processor selection, with clear winners emerging for specific use cases based on architectural strengths.

#Cloud service provider infrastructure

Major cloud providers prioritize VM density and operational efficiency. EPYC advantages in this space include:

- 30% higher container density on 128-core models
- SEV-SNP enables confidential computing services
- Superior memory bandwidth for object storage
- More PCIe lanes for NVMe arrays and network cards
- Lower cost per virtual CPU for customer billing

AWS EC2, Azure Virtual Machines, and Google Compute Engine all offer instance types built on EPYC processors.

#Enterprise database deployments

Traditional databases show platform preferences based on optimization history:

Intel Xeon advantages:

- SQL Server columnstore indexes leverage AVX-512
- SAP HANA certified configurations are widely available
- Mature ecosystem with proven deployment patterns

AMD EPYC advantages:

- PostgreSQL scales linearly with core count
- MongoDB benefits from memory bandwidth
- Redis in-memory operations run faster
- Cassandra distributed deployments cost less

#High-performance computing

Scientific computing workloads demonstrate clear platform preferences:

Memory bandwidth-sensitive (favor EPYC):

- Computational Fluid Dynamics (OpenFOAM)
- Weather modeling (WRF)
- Molecular dynamics (GROMACS)
- Seismic processing applications

Compute-optimized (may favor Intel):

- MATLAB with Intel MKL libraries
- Financial modeling using AVX-512
- Signal processing applications
- Some AI inference workloads

#Virtualization and private clouds

The impact of platform choice depends on the virtualization stack:

- VMware vSphere: Intel's optimizations vs. EPYC's density
- OpenStack: Platform neutral, scales with resources
- Kubernetes: Benefits from EPYC's core count
- Proxmox: Excellent support for both platforms

#Conclusion

AMD EPYC is the stronger choice for parallel workloads that need many cores and high memory bandwidth. Intel Xeon shines in single-threaded tasks, in accelerator-backed workloads such as AI inference, and in environments that depend on its mature software ecosystem. Choose based on your workload needs, licensing model, and total cost of ownership (TCO) analysis.
