A Complete Overview of Docker Architecture

Used by over 8 million developers and with over 13 billion monthly image downloads, Docker is one of the most popular containerization platforms. It removes repetitive, time-consuming configuration tasks and speeds up application development in both desktop and cloud environments.

To get comfortable with Docker, you first need a clear understanding of its architecture and underpinnings. In this guide, we will explore Docker’s architecture and see how its components work and interact with each other.

#Docker Containers vs Virtual Machines

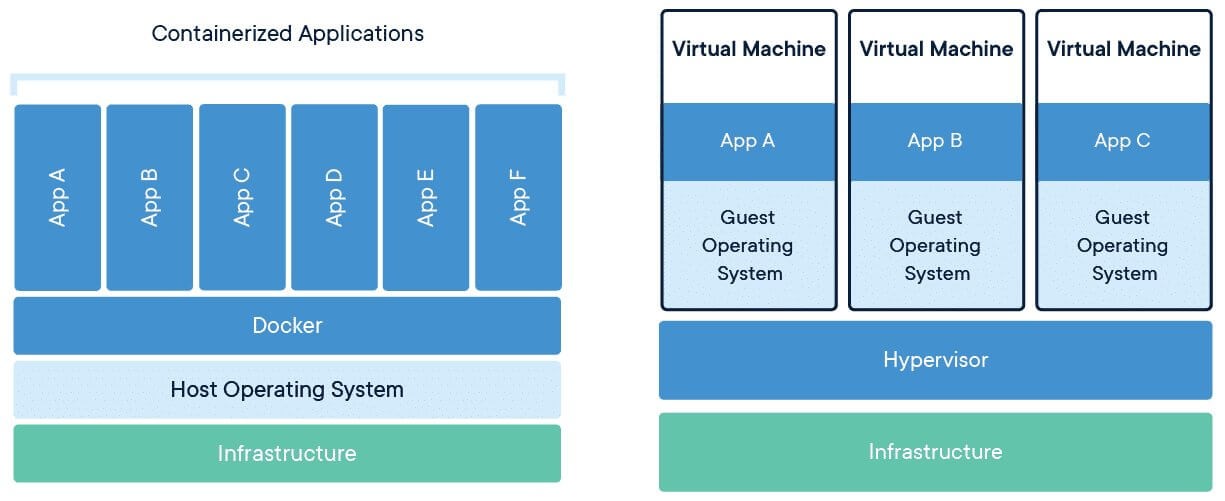

Let’s start off by drawing a comparison between Containers and Virtual Machines.

#Docker Containers

Containers are essentially portable, fully packaged computing environments that run isolated from the host system and from each other. A container packages everything you need to run an application, including its code, system libraries, dependencies, and configuration settings, in a standalone unit of software.

Since containers bundle all the essential components needed by the application to run smoothly, software applications can be moved and run on any computing platform without inconsistencies or errors. Such standardization is one of the biggest advantages of Docker.

Unlike virtual machines, containers do not virtualize hardware resources. Instead, they run on top of a container runtime platform that abstracts the resources. In Docker, the de facto container runtime platform is the Docker Engine.

Containers are lightweight and faster than virtual machines. Such swiftness comes from the fact that containers share the host’s operating system kernel, eliminating the need for a separate operating system for each container.

#Virtual Machines

Virtualization technology uses a hypervisor, software that creates an abstraction layer over the available hardware resources, including the CPU, RAM, USB ports, NICs, and more. This makes it possible for guests, or virtual machines, to use them.

Virtual machines are virtualized OS instances that are isolated and run independently of the host system. They emulate the hardware components of the host machine such as CPU, RAM, network interfaces, and storage to mention a few.

Because each virtual machine runs a full guest operating system on top of emulated hardware while drawing on the same physical resources as the host, VMs carry a high resource overhead, which can impact performance.

VMs are much larger than containers since they contain a full operating system, and they are typically measured in gigabytes. This also contributes to their high resource utilization. Containers are much smaller and can take as little as a few megabytes of storage.

#Containers Benefits

Both containers and virtual machines provide a level of isolation from the host system, and it's easy to get confused between the two technologies. However, the difference boils down to this:

Virtualization is about abstracting hardware resources on the host system while containerization is about abstracting the operating system kernel and running an application with all the essential components inside an isolated unit.

That said, containerization is the go-to solution when building and deploying applications for the following reasons:

Portability - Because containers are small and lightweight, they can be deployed on any platform with ease.

Simple deployment - Because containers contain all that an app needs to run, it becomes easy to deploy them since the software will run the same way on any computing environment.

Speed - Containers usually take seconds to start compared to virtual machines which generally take minutes to boot. And while boot times might vary depending on the size of the app, containers only take a tiny fraction of the virtual machines’ boot time to start up.

Resource Optimization - Since containers have a small memory footprint and do not abstract hardware resources, they are more economical and result in low resource overhead. This helps developers to efficiently optimize server resources.

Let us now switch gears and explore Docker’s architecture.

#Docker Architecture

The Docker architecture employs a client-server design. A Docker client talks to the Docker daemon through a REST API, over a UNIX socket or a network interface, and the daemon manages containers according to the commands issued by the user.
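
To make the client-server split concrete, the sketch below calls the daemon's REST API directly with curl instead of going through the Docker CLI. It assumes a Linux host where the daemon listens on the default Unix socket at /var/run/docker.sock and that the current user is allowed to access that socket. The function name `docker_api_get` and the `DOCKER_SOCK` variable are hypothetical names chosen for illustration.

```shell
# Send a GET request to the Docker daemon's REST API over its Unix socket.
# DOCKER_SOCK is a hypothetical override; /var/run/docker.sock is the usual
# default location on Linux.
docker_api_get() {
    curl --silent --unix-socket "${DOCKER_SOCK:-/var/run/docker.sock}" "http://localhost$1"
}

# Example usage (requires a running daemon):
#   docker_api_get /version          # similar information to `docker version`
#   docker_api_get /containers/json  # similar information to `docker ps`
```

The responses come back as JSON, which is the same data the Docker CLI formats into its familiar tables.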

Let’s take a deeper look at Docker’s architecture; how it runs applications in isolated containers and how it works under the hood.

#Docker Engine

Docker Engine is essentially Docker installed on a host. When you install Docker on a system, you are installing the following key components: the Docker daemon, Docker REST API, and Docker CLI which interacts with the Docker daemon through the REST API.

Let’s take a closer look at each of these components.

Docker Daemon - This is a background process that manages Docker objects such as images, containers, storage volumes, and networks. It constantly listens for API requests and processes them.

Docker REST API - This is the interface that applications use to talk to the Docker daemon and give it instructions.

Docker CLI - This is a command line interface for interacting with the Docker daemon. It helps you to perform tasks such as running, stopping, and removing containers, and managing images, to mention a few. It uses the REST API to interact with the Docker daemon.

The Docker CLI is a client tool and does not necessarily have to be on the same host as the other components. It can be on a separate system such as a laptop and still communicate with a remote Docker engine.

To communicate with a remote Docker engine by using Docker CLI, pass the -H flag followed by an IP address of the remote host and a port number as shown in the syntax below:

docker -H=remote-host-ip:port-number

For example, to run an Apache container on a remote Docker engine whose IP is 10.10.2.1, run the following command:

docker -H=10.10.2.1:2375 run httpd
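
The same remote target can also be set once through the DOCKER_HOST environment variable rather than repeating -H on every command. The sketch below is a minimal illustration that reuses the example address above; `remote_docker` is a hypothetical helper name. Keep in mind that port 2375 is conventionally the daemon's unencrypted, unauthenticated TCP port, so outside a trusted lab network a TLS-protected endpoint (conventionally port 2376) or an `ssh://user@host` address is the safer choice.

```shell
# Run any docker command against a remote engine.
# $1 is the daemon address (for example tcp://10.10.2.1:2375 or
# ssh://user@10.10.2.1); the remaining arguments go to docker unchanged.
# The body runs in a subshell so DOCKER_HOST does not leak into the caller.
remote_docker() (
    export DOCKER_HOST="$1"
    shift
    docker "$@"
)

# Example usage (requires a reachable remote daemon):
#   remote_docker tcp://10.10.2.1:2375 run httpd
```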

#Docker Client

The Docker client is the command-line interface (CLI) that lets you interact with the Docker daemon. It translates the commands you type into REST API calls and is the primary way that users interact with the Docker engine.

The client allows you to pull container images from a registry and to manage containers by running, stopping, and removing them.

Common commands issued by Docker clients include:

docker pull

docker run

docker build

docker stop

docker rm
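
Strung together, these commands cover a container's typical lifecycle. The sketch below is illustrative only: the function name `container_lifecycle` and the container name `web-demo` are hypothetical, and `nginx` is simply a convenient public image. `docker build` is left out because it needs a Dockerfile to work from.

```shell
# Walk one container through pull -> run -> stop -> rm.
# $1 is the image to use; it defaults to nginx for illustration.
container_lifecycle() {
    image="${1:-nginx}"
    # Download the image from the registry.
    docker pull "$image" || return 1
    # Start a container from the image in the background.
    docker run --detach --name web-demo "$image" || return 1
    # Stop the running container.
    docker stop web-demo || return 1
    # Remove the stopped container.
    docker rm web-demo
}
```

Each step stops the sequence if it fails, so a failed pull never leads to a run attempt.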

#Docker Objects

Let us now focus on Docker’s objects.

#Images

Docker images are the building blocks for containers. A Docker image is an immutable, read-only template that provides the instructions for creating a Docker container. It contains libraries, dependencies, source code, and other files required for an application to run. An image also carries metadata that describes it.

Docker images are built from a Dockerfile, a plain-text file of build instructions, and are used to store and ship applications. An image can be used to create a container, or it can serve as the base for a new image that adds further elements to the existing configuration. Images can be shared across teams through an organization’s private registry or a public registry such as Docker Hub.
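
In practice, an image is usually built from a Dockerfile, a plain-text file of build instructions. The sketch below writes a two-line Dockerfile that extends the public nginx image and then builds it. The function name `build_demo_image`, the default directory `./demo-image`, and the image name `demo-web:1.0` are all hypothetical.

```shell
# Write a minimal Dockerfile into a build directory and build an image from it.
# $1 is the build directory, $2 the image name and tag (both hypothetical).
build_demo_image() {
    dir="${1:-./demo-image}"
    tag="${2:-demo-web:1.0}"
    mkdir -p "$dir" || return 1
    # FROM selects the base image; RUN adds a new read-only layer on top of it.
    cat > "$dir/Dockerfile" <<'EOF'
FROM nginx
RUN echo "Hello from a custom image" > /usr/share/nginx/html/index.html
EOF
    # Build the image and label it with the given name and tag.
    docker build --tag "$tag" "$dir"
}
```

Running `build_demo_image` with no arguments would produce an image named `demo-web:1.0` that can be started with `docker run` like any other image.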

#Containers

A Docker container is a runnable instance of an image: a lightweight, standalone, executable package of an application. It packs everything needed to run the application: source code, libraries, dependencies, runtime, system tools, and settings.

Containers are created from Docker images with the docker run command. Consider a basic scenario of running an Nginx container on a Docker host as follows:

docker run nginx

If the Nginx image is not present locally on your system, Docker pulls it from the configured registry, which is Docker Hub by default. Once the image is pulled, a new Nginx container is created from it and started in your environment.

Since a container is built on an image, it has access to the files and settings defined by the image. You can also create a new image based on the current container's state.
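
Capturing a container's current state as a new image is done with docker commit. This is a minimal sketch that assumes a container already exists; the helper name `snapshot_container` and the names in the usage comment are hypothetical.

```shell
# Save the current filesystem state of a container as a new image.
# $1 is the container name or ID, $2 the name:tag for the resulting image.
snapshot_container() {
    docker commit "$1" "$2"
}

# Example usage (requires an existing container):
#   snapshot_container web-demo web-demo-snapshot:1.0
```

Data held in mounted volumes is not part of the committed image, so this approach only captures changes made inside the container's own filesystem.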

Since containers are inherently lightweight, they can be created in a matter of seconds with low resource overhead. This is one of the areas where containers have an edge over virtual machines.

#Networking

Docker networking is the subsystem through which Docker containers connect with other containers, either on the same host or on different hosts, including internet-facing hosts.

Networking in Docker is made possible using network drivers. There are five main network drivers in Docker:

Bridge: This is the default network driver for containers. If you don't explicitly specify a driver, Docker containers will be launched in this network by default. A bridge network is used when applications run in standalone containers that need to communicate with each other, while providing isolation from other containers that are on different networks.

Host: This driver removes the network isolation between a Docker container and the host system. The container shares the host’s network stack directly instead of getting its own IP address, so it is used when you want a container to sit on the same network as the host.

Overlay: This driver is used with a Docker Swarm cluster. It enables communication of swarm services or containers running on separate Docker hosts.

Macvlan: This driver assigns MAC addresses to containers so that they appear as physical devices on the network.

None: This driver disables networking for the container.
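
The drivers above are selected on the command line with `docker network create --driver` and `docker run --network`. The sketch below is illustrative: the network name `app-net`, the container names, and the function names are hypothetical, and the host-network example assumes a Linux host.

```shell
# Create a user-defined bridge network and start two containers on it.
# Containers on the same user-defined bridge can reach each other by name.
demo_bridge_network() {
    docker network create --driver bridge app-net || return 1
    docker run --detach --name web --network app-net nginx || return 1
    docker run --detach --name cache --network app-net redis
}

# Start a container that shares the host's network stack (host driver).
run_on_host_network() {
    docker run --detach --network host nginx
}

# Start a container with networking disabled (none driver).
run_without_network() {
    docker run --detach --network none nginx
}
```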

#Storage

Data stored in a container is ephemeral, and it is lost once the container is removed. Docker offers the following options to ensure persistent storage:

Data volumes: These are the most preferred storage systems for persisting data generated and used by containers. Data volumes are essentially filesystems mounted on Docker containers to store data generated by running containers. They are located on the host system outside the container.

Volume Container: Another solution is to have a dedicated container hosting a volume which is then used as a mount space for other containers. This is used as a means of sharing data between multiple containers at the same time. This approach is ideal when backing up data from other containers to a central volume.

Directory Mounts: For data volumes and volume containers, data is stored under the /var/lib/docker/volumes directory, which is managed entirely by Docker. With directory mounts (also called bind mounts), data can be stored in any directory on the host system and then be mounted into a container when the container is started.

Storage Plugins: Plugins extend the functionality of Docker. Storage plugins provide a means of connecting to external storage devices. They map storage from the host system to an external storage array or a physical device.
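
The first three options map onto flags of `docker volume` and `docker run`. The sketch below is a rough illustration under stated assumptions: the volume name `app-data`, the container names, and the function names are hypothetical, and the bind-mount example expects an absolute host path as its argument.

```shell
# Data volume: Docker manages where the data lives on the host.
run_with_volume() {
    docker volume create app-data || return 1
    docker run --detach --name db --volume app-data:/data redis
}

# Directory (bind) mount: map an existing host directory ($1) into the
# container, here as read-only web content for nginx.
run_with_bind_mount() {
    docker run --detach --name site --volume "$1":/usr/share/nginx/html:ro nginx
}

# Volume container pattern: a second container reuses the volumes of an
# existing container ($1) and lists the shared data.
run_with_volumes_from() {
    docker run --rm --volumes-from "$1" alpine ls /data
}
```

For example, `run_with_volume` followed by `run_with_volumes_from db` would let a short-lived Alpine container inspect the data written by the Redis container.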

#Docker registry

A Docker registry is a storage and distribution service that hosts various types of container images. Images come in various forms, from OS and web server images to base images for programming languages such as Python and Go.

The registry is organized into repositories where each repository stores all the versions of a specific image. An image might have numerous versions, which are identified by their tags.

Docker Hub is Docker’s public registry. It hosts both public and private repositories. Public repositories are accessible to everyone. Private ones restrict access to the repository’s owner and the collaborators the owner authorizes.

Docker Hub also supports official repositories which comprise verified container images that do not bear any username. Examples of such container images include Nginx, Ubuntu, Redis, Node, RabbitMQ, and Python.

By default, Docker Engine interacts with Docker Hub’s public registry. Unless the image name specifies another registry, a docker pull command pulls the container image from Docker Hub. You can also push your own images to the registry provided you have adequate access permissions.
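
Pushing an image is a two-step operation: tag the local image with the target repository name, then push that tag. The sketch below assumes you have already authenticated with `docker login`; the function name `push_image`, the user name `youruser`, and the registry host `registry.example.com:5000` are hypothetical placeholders.

```shell
# Tag a local image for a target repository and push it.
# $1 is the local image, $2 the full target reference, for example:
#   youruser/demo-web:1.0                        (Docker Hub, the default registry)
#   registry.example.com:5000/team/demo-web:1.0  (a hypothetical private registry)
push_image() {
    docker tag "$1" "$2" || return 1
    docker push "$2"
}
```

A reference without a registry host goes to Docker Hub, while a reference that starts with a host name is sent to that registry instead.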

#Conclusion

Docker is a widely used tool in DevOps today. It helps developers build, ship, and deliver applications quickly. For more information about Docker architecture, head over to the Docker documentation page.
